Download (right-click, save target as ...) this page as a jupyterlab notebook Lab33

Laboratory 33: KNN Classification

LAST NAME, FIRST NAME

R00000000

ENGR 1330 Laboratory 33 - In-Class and Homework

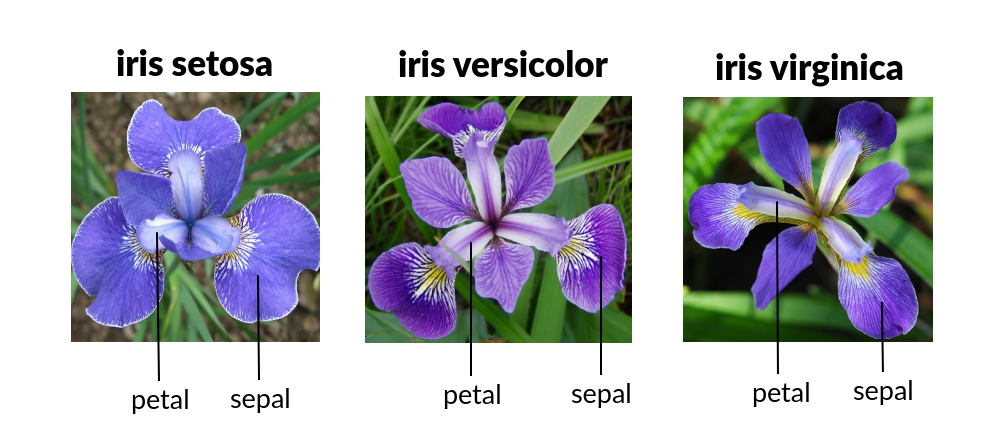

Example: Iris Plants Classification

This is a well known problem and database to be found in the pattern recognition literature. Fisher's paper is a classic in the field and is referenced frequently to this day. The Iris Flower Dataset involves predicting the flower species given measurements of iris flowers.

The Iris Data Set contains information on sepal length, sepal width, petal length, petal width all in cm, and class of iris plants. The data set contains 3 classes of 50 instances each, where each class refers to a type of iris plant. Hence, it is a multiclass classification problem and the number of observations for each class is balanced.

Let's use a KNN model in Python and see if we can classifity iris plants based on the four given predictors.

Acknowledgements

- Fisher,R.A. "The use of multiple measurements in taxonomic problems" Annual Eugenics, 7, Part II, 179-188 (1936); also in "Contributions to Mathematical Statistics" (John Wiley, NY, 1950).

- Duda,R.O., & Hart,P.E. (1973) Pattern Classification and Scene Analysis. (Q327.D83) John Wiley & Sons. ISBN 0-471-22361-1. See page 218.

- Dasarathy, B.V. (1980) "Nosing Around the Neighborhood: A New System Structure and Classification Rule for Recognition in Partially Exposed Environments". IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. PAMI-2, No. 1, 67-71.

- Gates, G.W. (1972) "The Reduced Nearest Neighbor Rule". IEEE Transactions on Information Theory, May 1972, 431-433.

- See also: 1988 MLC Proceedings, 54-64. Cheeseman et al's AUTOCLASS II conceptual clustering system finds 3 classes in the data.

Load some libraries:

import numpy as np

import pandas as pd

from matplotlib import pyplot as plt

import sklearn.metrics as metrics

import seaborn as sns

%matplotlib inline

Read the dataset and explore it using tools such as descriptive statistics:

# Read the remote directly from its url (Jupyter):

url = "https://archive.ics.uci.edu/ml/machine-learning-databases/iris/iris.data"

# Assign colum names to the dataset

names = ['sepal-length', 'sepal-width', 'petal-length', 'petal-width', 'Class']

# Read dataset to pandas dataframe

dataset = pd.read_csv(url, names=names)

dataset.tail(9)

dataset.describe()

Split the predictors and target - similar to what we did for logisitc regression:

X = dataset.iloc[:, :-1].values

y = dataset.iloc[:, 4].values

Then, the dataset should be split into training and testing. This way our algorithm is tested on un-seen data, as it would be in a real-world application. Let's go with a 80/20 split:

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

#This means that out of total 150 records:

#the training set will contain 120 records &

#the test set contains 30 of those records.

It is extremely straight forward to train the KNN algorithm and make predictions with it, especially when using Scikit-Learn. The first step is to import the KNeighborsClassifier class from the sklearn.neighbors library. In the second line, this class is initialized with one parameter, i.e. n_neigbours. This is basically the value for the K. There is no ideal value for K and it is selected after testing and evaluation, however to start out, 5 seems to be the most commonly used value for KNN algorithm.

from sklearn.neighbors import KNeighborsClassifier

classifier = KNeighborsClassifier(n_neighbors=5)

classifier.fit(X_train, y_train)

The final step is to make predictions on our test data. To do so, execute the following script:

y_pred = classifier.predict(X_test)

As it's time to evaluate our model, we will go to our rather new friends, confusion matrix, precision, recall and f1 score as the most commonly used discrete GOF metrics.

from sklearn.metrics import classification_report, confusion_matrix

print(confusion_matrix(y_test, y_pred))

print(classification_report(y_test, y_pred))

cm = pd.DataFrame(confusion_matrix(y_test, y_pred))

sns.heatmap(cm, annot=True)

plt.title('Confusion matrix', y=1.1)

plt.ylabel('Predicted label')

plt.xlabel('Actual label')

What if we had used a different value for K? What is the best value for K?

One way to help you find the best value of K is to plot the graph of K value and the corresponding error rate for the dataset. In this section, we will plot the mean error for the predicted values of test set for all the K values between 1 and 50. To do so, let's first calculate the mean of error for all the predicted values where K ranges from 1 and 50:

error = []

# Calculating error for K values between 1 and 50

# In each iteration the mean error for predicted values of test set is calculated and

# the result is appended to the error list.

for i in range(1, 50):

knn = KNeighborsClassifier(n_neighbors=i)

knn.fit(X_train, y_train)

pred_i = knn.predict(X_test)

error.append(np.mean(pred_i != y_test))

The next step is to plot the error values against K values:

plt.figure(figsize=(12, 6))

plt.plot(range(1, 50), error, color='red', linestyle='dashed', marker='o',

markerfacecolor='blue', markersize=10)

plt.title('Error Rate K Value')

plt.xlabel('K Value')

plt.ylabel('Mean Error')

Exercise¶

Everything above we did in class, but how about classifing a new measurement? Thats your lab exercise!

A framework might be:

- Use the database to train a classifier (done)

- Explore the sturcture of

y_pred = classifier.predict(X_test)This seems like the tool we want. - Once you know how to predict from the classifier, determine the classification of thge following sample

| sepal-length | sepal-width | petal-length | petal-width |

|---|---|---|---|

| 6.9 | 3.1 | 5.1 | 2.3 |