Pandas

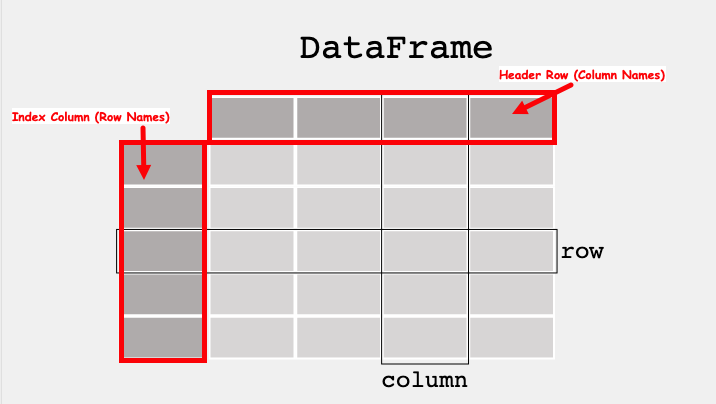

In pandas, a data table is called a DataFrame. The same term is used in other programming environments too, for example R's data.frame.

The figure below, from https://pandas.pydata.org/docs/getting_started/index.html, illustrates the DataFrame model:

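Each column in a DataFrame is a Series, and a single row selected from a DataFrame is also returned as a Series. The header row and the index column are special: they hold the column labels and the row labels rather than data.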
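As a small illustration of how a DataFrame relates to its Series, here is a minimal sketch. The column names, index labels, and values are made up for this example and do not come from the pandas documentation; the import is included only to keep the snippet self-contained.

```python
import pandas as pd

# Hypothetical data, invented for illustration only
df = pd.DataFrame(
    {"name": ["Ada", "Ben", "Cy"], "age": [36, 41, 29]},
    index=["a", "b", "c"],  # row labels (the index column)
)

col = df["age"]    # selecting one column gives a Series
row = df.loc["b"]  # selecting one row by its index label also gives a Series

print(type(col).__name__)  # Series
print(type(row).__name__)  # Series
print(list(df.columns))    # column labels (the header row): ['name', 'age']
print(list(df.index))      # row labels (the index column): ['a', 'b', 'c']
```

Note that a column Series is named after its column label and indexed by the row labels, while a row Series is named after its row label and indexed by the column labels.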

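To use pandas, we need to import the module. pandas is built on NumPy and installs it as a dependency, so NumPy does not have to be imported for pandas to work. It is usually imported alongside pandas anyway, because the two are often used together.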